AGV (Automated Guided Vehicle) Multi-sensor Fusion for Real-time Obstacle Avoidance Technology

I Introduction to the AGV Multi-sensor Fusion Real-time Obstacle Avoidance System

1. Introduction

Sensor fusion technology is key to enabling robots to avoid obstacles with full coverage. Its principle is modelled on the way the human brain integrates information. It coordinates multiple sensors (e.g., LiDAR and vision cameras) and combines their information at multiple levels and across multiple dimensions. This compensates for the limitations of any single sensor and ultimately builds a consistent perception of the environment. The technology combines the complementary strengths of multi-source data (e.g., accurate ranging and object recognition) and optimises the information-processing flow with intelligent algorithms. As a result, AGVs improve both obstacle avoidance accuracy and environmental adaptability in complex, dynamic environments.

2. Improve Detection Accuracy

Fusing data from LiDAR, vision cameras, ultrasonic sensors and other sensors lets each one compensate for the others' weaknesses and improves the accuracy of obstacle identification. LiDAR measures distance accurately but is easily disturbed by glare. Vision can identify the type of an object but is limited in low light. Ultrasonic sensors detect obstacles in the close-range blind zone.
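
One common way to realise this complementarity for ranging is an inverse-variance weighted average. When two sensors measure the distance to the same obstacle, each reading is weighted by how precise that sensor is. The sketch below assumes independent, roughly Gaussian noise for each sensor. The sensor names and noise figures are hypothetical and are not specifications of any particular device.

```python
from dataclasses import dataclass


@dataclass
class RangeReading:
    """One distance reading to the same obstacle from one sensor."""
    sensor: str
    distance_m: float
    std_m: float  # assumed 1-sigma measurement noise of this sensor


def fuse_ranges(readings: list[RangeReading]) -> tuple[float, float]:
    """Inverse-variance weighted average of independent range readings.

    Returns (fused_distance_m, fused_std_m). A sensor with smaller noise gets a
    proportionally larger weight, so a precise LiDAR reading dominates while a
    noisier ultrasonic reading still contributes.
    """
    if not readings:
        raise ValueError("need at least one reading")
    weights = [1.0 / (r.std_m ** 2) for r in readings]
    total = sum(weights)
    fused = sum(w * r.distance_m for w, r in zip(weights, readings)) / total
    fused_std = (1.0 / total) ** 0.5
    return fused, fused_std


if __name__ == "__main__":
    readings = [
        RangeReading("lidar", 2.00, 0.02),       # hypothetical noise figures
        RangeReading("ultrasonic", 2.10, 0.10),
    ]
    d, s = fuse_ranges(readings)
    print(f"fused distance = {d:.3f} m, std = {s:.3f} m")
```

With these numbers the fused estimate lies close to the LiDAR reading. Its standard deviation is slightly smaller than the LiDAR's alone, which is the intended effect of fusion.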

3. Enhanced System Reliability

A redundant design ensures that if a single sensor fails (e.g., the LiDAR), the remaining sensors can still maintain obstacle avoidance. Kalman filtering and similar algorithms filter out noise to improve data stability.
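
A scalar Kalman filter that tracks the distance to one obstacle can illustrate redundancy and noise filtering together. This minimal sketch assumes a random-walk model: the distance is treated as roughly constant between cycles, with process noise `q`. The class name `ScalarKalman` and all noise values are hypothetical. A sensor that fails in a given cycle contributes no update, so the estimate continues on the remaining sensors.

```python
from typing import Optional, Sequence


class ScalarKalman:
    """1-D Kalman filter for the distance to a single obstacle (random-walk model)."""

    def __init__(self, x0: float, p0: float, q: float):
        self.x = x0  # distance estimate (m)
        self.p = p0  # estimate variance (m^2)
        self.q = q   # process noise variance added each cycle (m^2)

    def predict(self) -> None:
        # Random-walk model: the expected distance is unchanged, uncertainty grows.
        self.p += self.q

    def update(self, z: float, r: float) -> None:
        # Standard scalar update with measurement z and measurement variance r.
        k = self.p / (self.p + r)
        self.x += k * (z - self.x)
        self.p *= 1.0 - k

    def step(self, readings: Sequence[tuple[Optional[float], float]]) -> float:
        """Predict once, then apply every (reading, variance) pair that is present.

        A reading of None stands for a failed or dropped-out sensor. It is
        skipped, and the remaining sensors keep the estimate going.
        """
        self.predict()
        for z, r in readings:
            if z is not None:
                self.update(z, r)
        return self.x


if __name__ == "__main__":
    LIDAR_VAR, SONAR_VAR = 0.02 ** 2, 0.10 ** 2  # hypothetical sensor variances
    kf = ScalarKalman(x0=3.0, p0=1.0, q=1e-3)
    frames = [
        [(2.98, LIDAR_VAR), (3.05, SONAR_VAR)],
        [(3.01, LIDAR_VAR), (2.95, SONAR_VAR)],
        [(None, LIDAR_VAR), (3.02, SONAR_VAR)],  # LiDAR dropout in this cycle
    ]
    for frame in frames:
        print(f"{kf.step(frame):.3f} m")
```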

4. Extended Environmental Adaptability

The system dynamically switches to the sensors best suited to each complex scene. Under electromagnetic interference it selects data from interference-resistant sensors. In smoky environments it fuses ultrasonic and LiDAR data. For transparent or overhanging obstacles it enables infrared and other specialised sensors.
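
Dynamic switching of this kind can be expressed as simple rules. The sketch below assumes a hypothetical scene monitor that reports environment flags, and it returns the set of sensors to trust. Which sensors count as sensitive to electromagnetic interference depends on the actual hardware, so it is left as a parameter.

```python
from enum import Flag, auto


class Condition(Flag):
    """Hypothetical scene flags reported by an environment monitor."""
    NONE = 0
    EMI = auto()          # strong electromagnetic interference
    SMOKE = auto()        # smoke or dust in the air
    TRANSPARENT = auto()  # glass or transparent film nearby
    OVERHANG = auto()     # overhanging obstacles, e.g. shelf beams


def select_sensors(cond: Condition, emi_sensitive: frozenset[str] = frozenset()) -> set[str]:
    """Rule-based choice of which sensors to trust in the current scene."""
    active = {"lidar", "camera", "ultrasonic"}  # default sensor set
    if Condition.SMOKE in cond:
        active.discard("camera")  # smoke: fall back to ultrasonic + LiDAR fusion
    if cond & (Condition.TRANSPARENT | Condition.OVERHANG):
        active.add("infrared")    # special sensor for hard-to-see obstacles
    if Condition.EMI in cond:
        active -= emi_sensitive   # keep only interference-resistant sensors
    return active
```

The EMI rule is applied last so that no earlier rule can re-enable a sensor the interference has made untrustworthy.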

5. Optimise Obstacle Avoidance Decision Making

Zoned multi-sensor sensing divides the area ahead into obstacle avoidance and detour zones. It combines obstacle distance (LiDAR), obstacle type (vision) and close-proximity information (ultrasonic) into a global environment model, from which the optimal path is planned accurately.
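
Zone-based decision making can be sketched as follows, assuming the three sensor outputs have already been associated with the same obstacle. The zone boundaries, the extra margin for people and the action names are hypothetical values chosen for illustration.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical zone boundaries in metres, measured forward from the bumper.
STOP_ZONE_M = 0.5
AVOID_ZONE_M = 1.5
DETOUR_ZONE_M = 3.0
# Hypothetical enlargement factor for obstacles classified as people.
PERSON_MARGIN = 1.5


@dataclass
class FusedObstacle:
    lidar_distance_m: float        # distance from LiDAR
    vision_class: str              # type from vision, e.g. "person", "pallet"
    ultrasonic_near: bool = False  # close-range hit from ultrasonic


def zone_action(obs: Optional[FusedObstacle]) -> str:
    """Map a fused obstacle onto a zone-based action."""
    if obs is None:
        return "proceed"
    if obs.ultrasonic_near:
        return "stop"  # a close-range blind-zone hit overrides everything else
    scale = PERSON_MARGIN if obs.vision_class == "person" else 1.0
    d = obs.lidar_distance_m
    if d < STOP_ZONE_M * scale:
        return "stop"
    if d < AVOID_ZONE_M * scale:
        return "slow_down"
    if d < DETOUR_ZONE_M * scale:
        return "detour"
    return "proceed"
```

The vision class only rescales the zones, so the LiDAR distance remains the quantity that decides which zone applies.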

II Principles of Multi-sensor Fusion Obstacle Avoidance

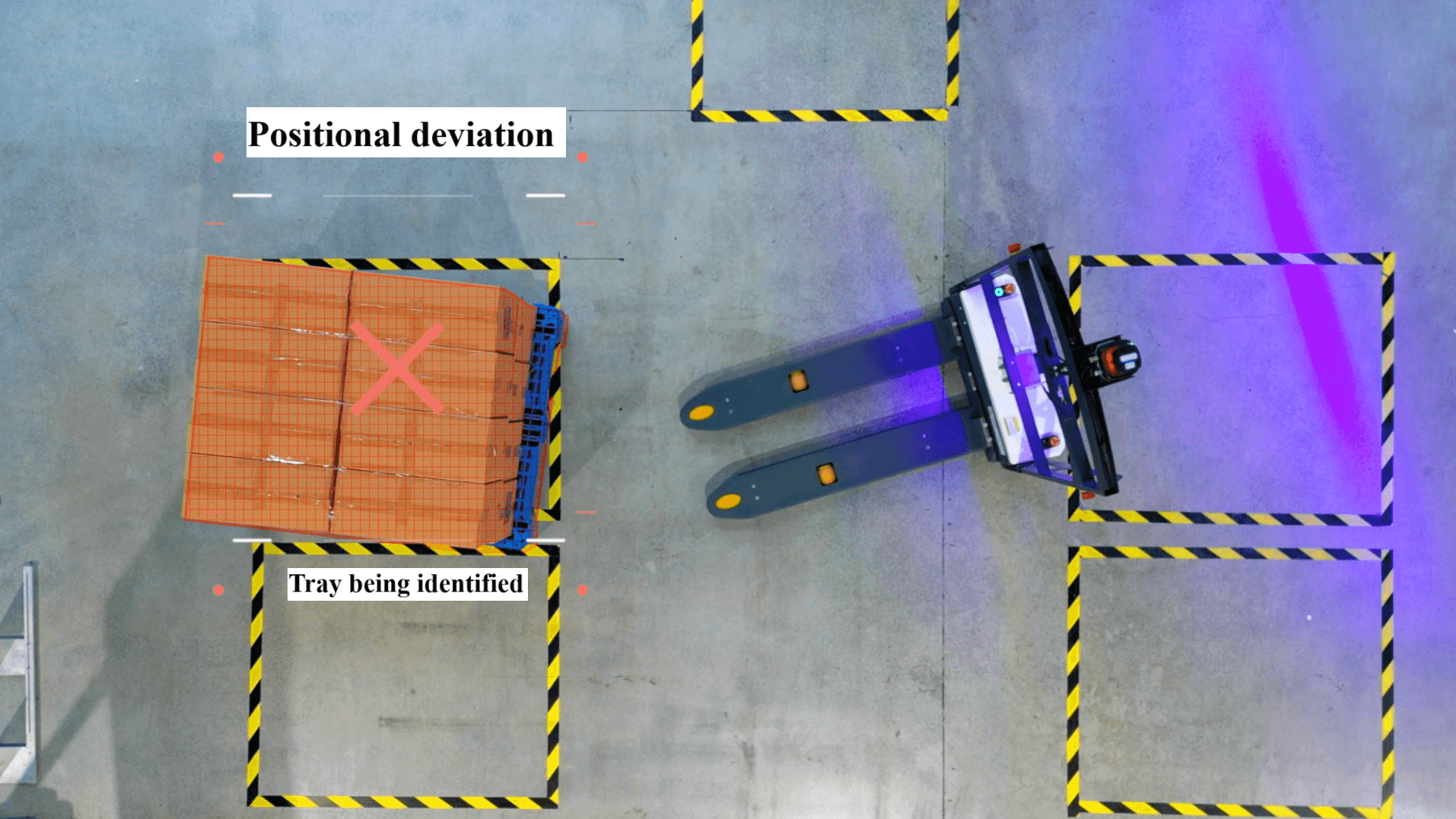

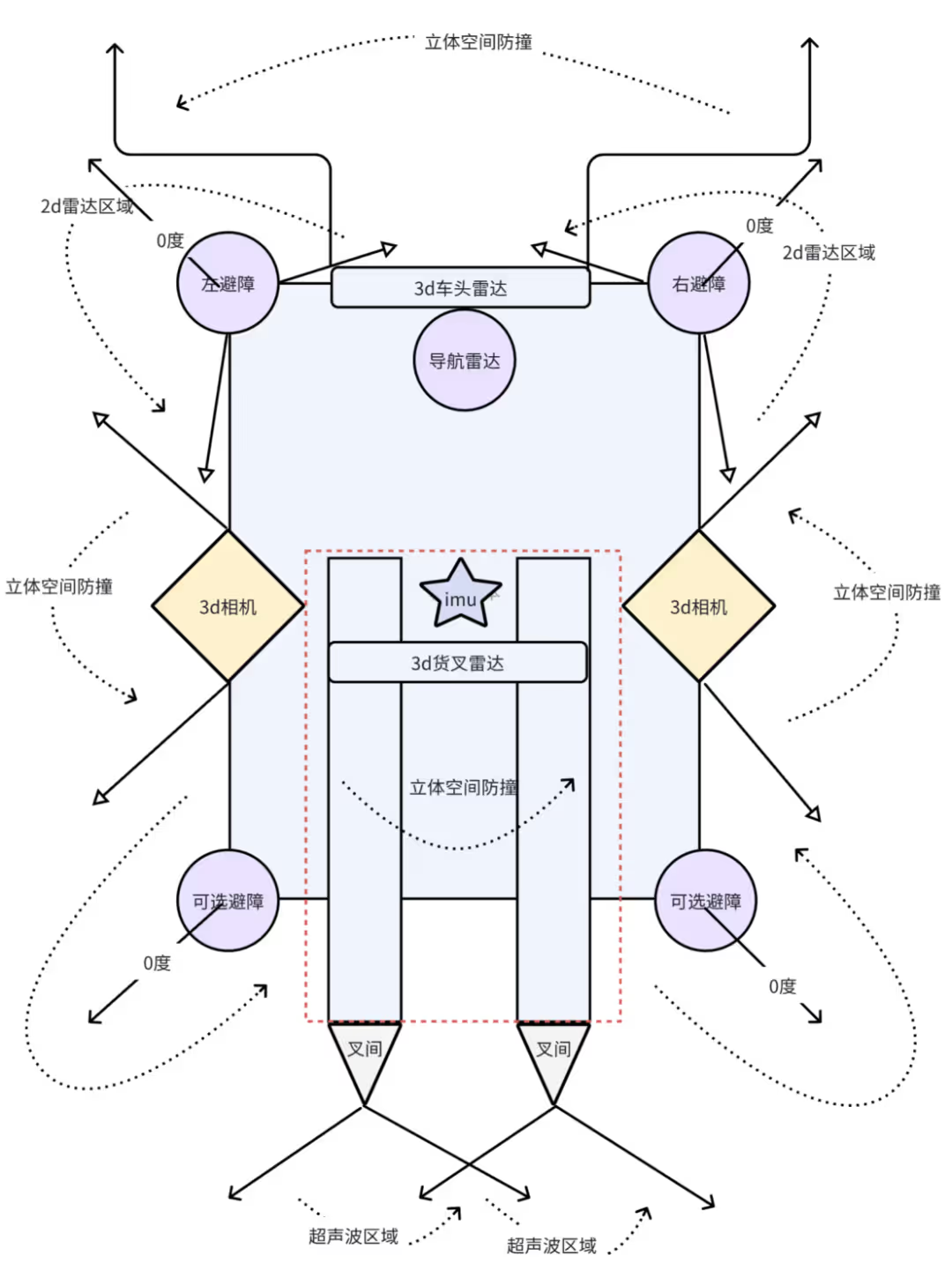

1. Typical Layout

- Front (main obstacle avoidance): 2D laser scanners on the left and right scan for obstacles in the horizontal plane. Ultrasonic sensors at the bottom supplement the detection of low obstacles, and an obliquely mounted 3D laser at the upper front covers the three-dimensional space.

- Lateral protection: obliquely mounted depth cameras on both sides eliminate the AGV's lateral blind spots.

- Fork collision avoidance: an IMU on the fork monitors its attitude in real time. Combined with data from the upper and lower 3D lasers, this dynamically predicts the fork trajectory and protects the surrounding area.

- Inter-fork collision avoidance: dual ultrasonic sensors monitor obstacles in the fan-shaped areas on both sides of the vehicle's rear.

2. Fusion Methods

- Data level: timestamps and coordinate systems are unified across all sensors, and the point cloud data are merged directly.

- Feature level: LiDAR edge features are fused with visual SIFT features. Alternatively, deep learning models (PointNet++ for point clouds, CNNs for images) extract the features, or an EKF generates obstacle probability maps.

- Decision level: a Bayesian network dynamically weights the confidence of each sensor. In emergencies the ultrasonic sensors trigger an emergency stop, while the LiDAR plans detour paths.
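
The data-level step (common clock, common coordinate frame, direct merge) can be sketched in 2-D. The sketch rests on three assumptions. Each sensor's mounting pose in the vehicle frame is known, and the `Mount` values are hypothetical. Scans are matched to a query time by nearest timestamp within a tolerance rather than interpolated. Motion during a scan is ignored.

```python
import math
from bisect import bisect_left
from dataclasses import dataclass
from typing import Optional

Point = tuple[float, float]  # (x, y) in metres


@dataclass
class Scan:
    stamp: float         # seconds, on a clock shared by all sensors
    points: list[Point]  # in the sensor's own frame


@dataclass
class Mount:
    """Hypothetical 2-D mounting pose of a sensor in the vehicle frame."""
    x: float
    y: float
    yaw: float  # radians


def to_vehicle(points: list[Point], mount: Mount) -> list[Point]:
    """Rigid 2-D transform (rotate by yaw, then translate) into the vehicle frame."""
    c, s = math.cos(mount.yaw), math.sin(mount.yaw)
    return [(mount.x + c * px - s * py, mount.y + s * px + c * py) for px, py in points]


def nearest_scan(scans: list[Scan], t: float, tol: float) -> Optional[Scan]:
    """Scan whose stamp is closest to t (scans sorted by stamp), or None if > tol away."""
    stamps = [sc.stamp for sc in scans]
    i = bisect_left(stamps, t)
    candidates = [scans[j] for j in (i - 1, i) if 0 <= j < len(scans)]
    if not candidates:
        return None
    best = min(candidates, key=lambda sc: abs(sc.stamp - t))
    return best if abs(best.stamp - t) <= tol else None


def merge_at(t: float, streams: list[tuple[list[Scan], Mount]], tol: float = 0.05) -> list[Point]:
    """Merge each sensor's time-aligned scan into one vehicle-frame point cloud."""
    merged: list[Point] = []
    for scans, mount in streams:
        scan = nearest_scan(scans, t, tol)
        if scan is not None:  # a sensor with no scan near t is skipped, not waited for
            merged.extend(to_vehicle(scan.points, mount))
    return merged
```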

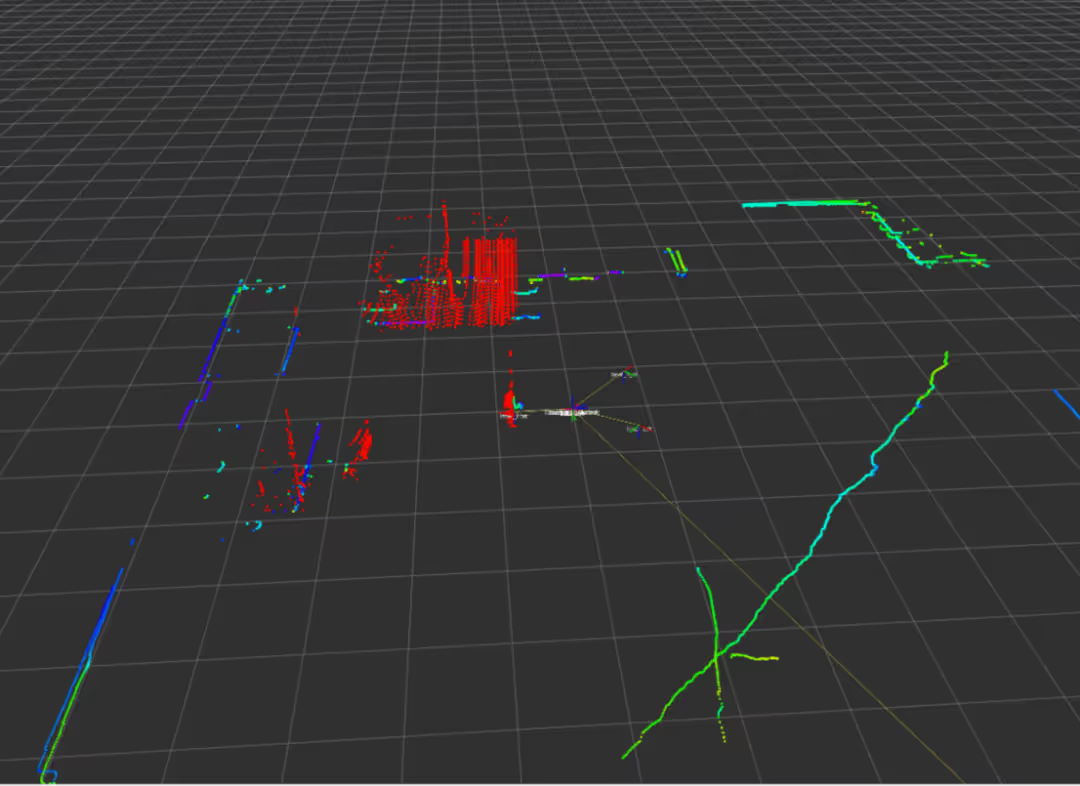

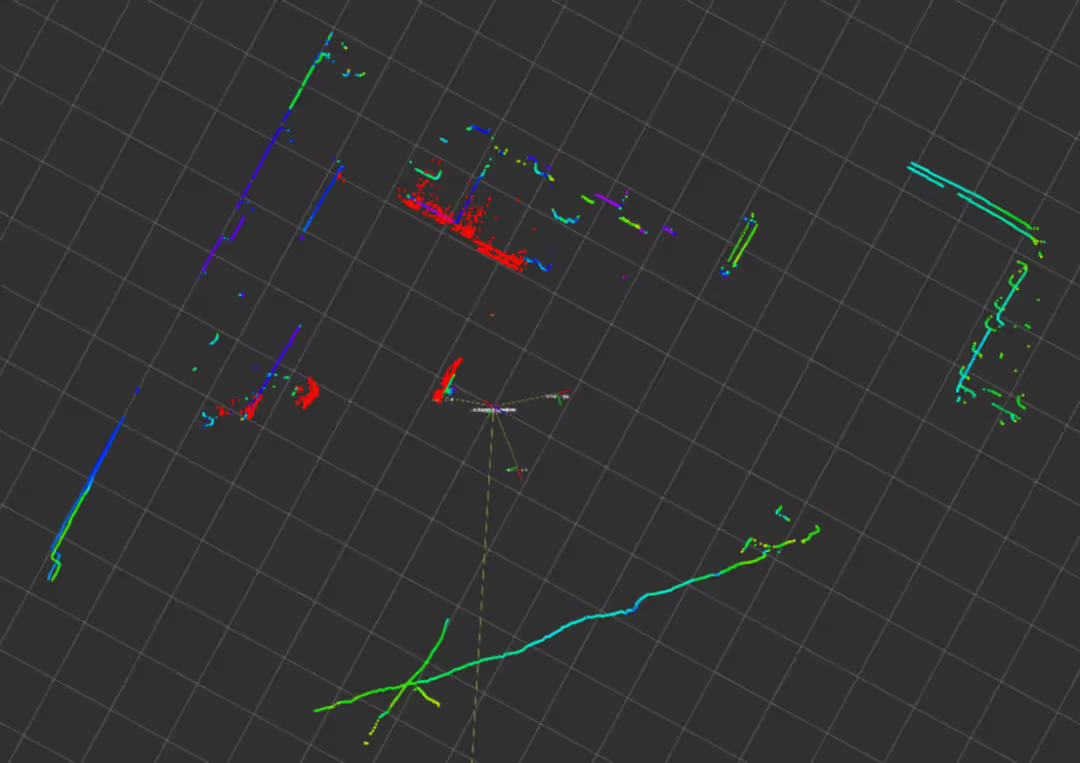
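
The decision-level confidence weighting from the Fusion Methods list can be sketched with weighted log-odds fusion. This is a simpler stand-in for the Bayesian network the text mentions. With all weights equal to 1 it reduces to a naive-Bayes combination of conditionally independent sensors, and lowering a sensor's weight discounts it. The emergency-stop range, the decision threshold and the action names are hypothetical.

```python
import math
from typing import Optional


def logit(p: float) -> float:
    p = min(max(p, 1e-6), 1.0 - 1e-6)  # clamp so 0 or 1 do not give infinities
    return math.log(p / (1.0 - p))


def fuse_obstacle_probability(reports: dict[str, float],
                              weights: dict[str, float],
                              prior: float = 0.5) -> float:
    """Weighted log-odds fusion of per-sensor obstacle probabilities.

    With every weight equal to 1 this is the naive-Bayes combination of
    conditionally independent sensors. A weight below 1 discounts a sensor
    whose confidence has dropped (e.g. a camera in low light).
    """
    log_odds = logit(prior)
    for sensor, p in reports.items():
        log_odds += weights.get(sensor, 1.0) * (logit(p) - logit(prior))
    return 1.0 / (1.0 + math.exp(-log_odds))


def decision_level_action(reports: dict[str, float],
                          weights: dict[str, float],
                          ultrasonic_range_m: Optional[float],
                          estop_range_m: float = 0.3,
                          threshold: float = 0.7) -> str:
    """Ultrasonic emergency stop first, otherwise act on the fused probability."""
    if ultrasonic_range_m is not None and ultrasonic_range_m < estop_range_m:
        return "emergency_stop"  # hard override, independent of the other sensors
    if fuse_obstacle_probability(reports, weights) >= threshold:
        return "replan_detour"   # hand over to the LiDAR-based path planner
    return "continue"
```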

3. Environment Sensing

- Far/near division of labour: the 3D LiDAR point cloud detects global obstacles at long range, while the depth cameras identify local obstacles at close range.

- Obstacle definition: obstacles include people, goods, forklifts and other objects with physical volume. The core process is ‘detection → tracking → localisation’ (confirming existence → predicting the trajectory → calculating the distance).

- Semantic map: obstacle categories (e.g., shelves, lifts) are labelled through instance segmentation. Their contours are then extracted and projected onto the map to support intelligent obstacle avoidance decisions.

- Global obstacle avoidance process: the point cloud data are large and noisy, so they are first filtered and downsampled. After the ground points are segmented out, a clustering algorithm separates the remaining points into obstacle clusters. A bounding box is fitted to each cluster to obtain attributes such as its centre and dimensions. A point cloud object detection framework (e.g., PointPillars) then provides semantic labels and tracking, and a Kalman filter smooths each trajectory. At the same time, the computation is optimised to guarantee real-time processing. Where motion distortion exists, it must be compensated for.
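
The first stages of this pipeline (downsampling, ground removal, clustering and bounding-box fitting) can be sketched in plain Python under simplifying assumptions. The ground is treated as a flat floor at a known height instead of being fitted (e.g., with RANSAC). Clustering is a Euclidean radius search in the XY plane, and boxes are axis-aligned. Detection networks such as PointPillars, tracking and distortion compensation are not shown.

```python
from collections import defaultdict, deque

Point3 = tuple[float, float, float]  # (x, y, z) in metres, vehicle frame


def voxel_downsample(points: list[Point3], voxel: float) -> list[Point3]:
    """Replace all points falling in the same cubic voxel with their centroid."""
    cells = defaultdict(list)
    for p in points:
        cells[tuple(int(c // voxel) for c in p)].append(p)
    return [tuple(sum(axis) / len(pts) for axis in zip(*pts)) for pts in cells.values()]


def remove_ground(points: list[Point3], ground_z: float = 0.0, tol: float = 0.05) -> list[Point3]:
    """Crude ground segmentation: drop points within tol of an assumed flat floor."""
    return [p for p in points if p[2] > ground_z + tol]


def euclidean_clusters(points: list[Point3], radius: float, min_size: int = 3) -> list[list[Point3]]:
    """Group points linked by XY gaps <= radius (BFS over a grid of radius-sized cells)."""
    grid = defaultdict(list)
    for idx, (x, y, _) in enumerate(points):
        grid[(int(x // radius), int(y // radius))].append(idx)
    seen, clusters = set(), []
    for start in range(len(points)):
        if start in seen:
            continue
        seen.add(start)
        queue, members = deque([start]), []
        while queue:
            i = queue.popleft()
            members.append(i)
            xi, yi, _ = points[i]
            gx, gy = int(xi // radius), int(yi // radius)
            # Any neighbour within radius must lie in one of the 3x3 surrounding cells.
            for dx in (-1, 0, 1):
                for dy in (-1, 0, 1):
                    for j in grid.get((gx + dx, gy + dy), []):
                        if j not in seen and (points[j][0] - xi) ** 2 + (points[j][1] - yi) ** 2 <= radius ** 2:
                            seen.add(j)
                            queue.append(j)
        if len(members) >= min_size:  # very small clusters are treated as noise
            clusters.append([points[i] for i in members])
    return clusters


def bounding_box(cluster: list[Point3]) -> tuple[Point3, Point3]:
    """Axis-aligned box as (centre, size), from per-axis min and max."""
    lo = [min(axis) for axis in zip(*cluster)]
    hi = [max(axis) for axis in zip(*cluster)]
    centre = tuple((a + b) / 2 for a, b in zip(lo, hi))
    size = tuple(b - a for a, b in zip(lo, hi))
    return centre, size
```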

4. Real-time Obstacle Avoidance Algorithm

- Local obstacle avoidance: depth cameras cover the area immediately around the vehicle body. The fork IMU provides real-time feedback on the attitude angle, and the bottom sensors monitor obstacles in the surrounding space.

- Path re-planning: based on the AGV's current speed (with a 100-200 ms cycle), the dynamic window approach samples feasible trajectories. It also predicts the movement of dynamic obstacles and optimises the path in real time.

- Reinforcement learning assistance: algorithms such as DQN and PPO train the AGV in a simulation environment to cope with complex dynamic scenes, improving its autonomous decision-making ability.
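
The dynamic window approach used for path re-planning can be sketched under the following assumptions. The AGV follows a unicycle motion model, and obstacles are predicted with constant velocity. The control cycle is 0.15 s, inside the 100-200 ms range quoted above. The speed and acceleration limits and the scoring weights are hypothetical. The function returns the `(v, w)` command with the best score among collision-free samples. If every sampled trajectory collides, it falls back to `(0.0, 0.0)`, a stop command.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Limits:
    """Hypothetical AGV limits; real values come from the vehicle specification."""
    v_max: float = 1.5         # m/s
    w_max: float = 1.0         # rad/s
    a_max: float = 0.5         # m/s^2
    alpha_max: float = 1.0     # rad/s^2
    robot_radius: float = 0.6  # m, collision radius around the AGV centre


@dataclass
class MovingObstacle:
    x: float
    y: float
    vx: float = 0.0  # assumed constant velocity for prediction
    vy: float = 0.0


def rollout(x, y, th, v, w, dt, steps):
    """Forward-simulate a unicycle model at constant (v, w)."""
    traj = []
    for _ in range(steps):
        th += w * dt
        x += v * math.cos(th) * dt
        y += v * math.sin(th) * dt
        traj.append((x, y, th))
    return traj


def dwa_step(state, goal, obstacles, lim=Limits(), cycle=0.15, horizon=2.0, n=7):
    """Choose (v, w) for the next cycle. state is (x, y, theta, v, w)."""
    x, y, th, v0, w0 = state
    # Dynamic window: velocities reachable within one cycle under the accel limits.
    v_lo = max(0.0, v0 - lim.a_max * cycle)
    v_hi = min(lim.v_max, v0 + lim.a_max * cycle)
    w_lo = max(-lim.w_max, w0 - lim.alpha_max * cycle)
    w_hi = min(lim.w_max, w0 + lim.alpha_max * cycle)
    steps = int(horizon / cycle)
    best, best_score = (0.0, 0.0), -math.inf  # default: stop
    for i in range(n):
        v = v_lo + (v_hi - v_lo) * i / (n - 1)
        for j in range(n):
            w = w_lo + (w_hi - w_lo) * j / (n - 1)
            traj = rollout(x, y, th, v, w, cycle, steps)
            clearance = math.inf
            for k, (px, py, _) in enumerate(traj, start=1):
                t = k * cycle
                for ob in obstacles:  # obstacle position predicted at time t
                    d = math.hypot(px - (ob.x + ob.vx * t), py - (ob.y + ob.vy * t))
                    clearance = min(clearance, d - lim.robot_radius)
            if clearance <= 0.0:
                continue  # trajectory collides: not admissible
            ex, ey, eth = traj[-1]
            diff = math.atan2(goal[1] - ey, goal[0] - ex) - eth
            heading = -abs(math.atan2(math.sin(diff), math.cos(diff)))  # wrapped to [-pi, pi]
            # Hypothetical weights: goal heading, capped clearance, forward speed.
            score = 1.0 * heading + 0.5 * min(clearance, 2.0) + 0.3 * v
            if score > best_score:
                best, best_score = (v, w), score
    return best
```

Because obstacle positions are predicted at each rollout time, an obstacle that is moving away does not block the path the way a static one at the same position would.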

III Multi-sensor Fusion Obstacle Avoidance Challenges and Future

1. AGV Obstacle Avoidance Application Scenarios

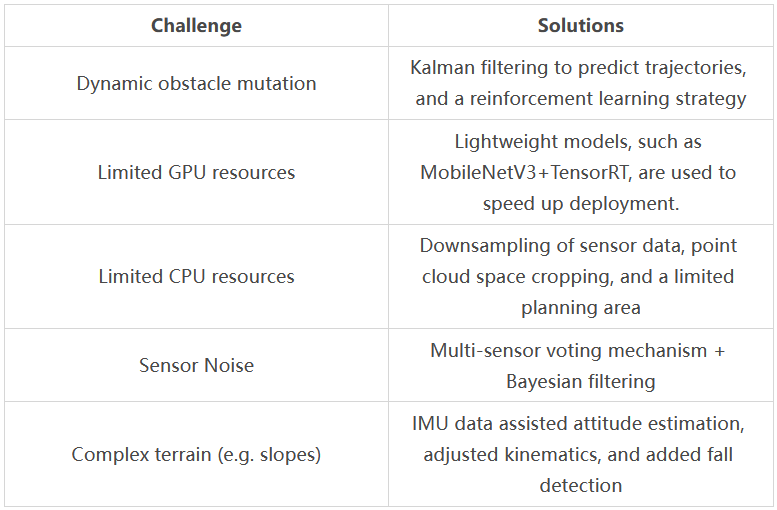

In practical application scenarios, AGV obstacle avoidance often runs into difficulties. The main challenges and their solutions are listed below:

2. Future Directions

- Bionic strategies: mimic ant colony/bird swarm behaviour to predict dynamic obstacle trajectories.

- Neural fusion: end-to-end models (e.g., PointNet++ combined with a Transformer) that process LiDAR and vision data directly.

- Brain-inspired architectures: spiking neural networks (SNNs) for low-power decision-making, and LSTMs with attention mechanisms for predicting obstacle motion over long time series.

- Collaborative computing: layered cloud-edge-device processing to reduce the on-board computing load.

- Sim-to-real transfer: domain randomisation to improve generalisation, and online adaptive fine-tuning of models in real time (e.g., Meta-RL).

- Swarm intelligence: federated learning to optimise multi-AGV paths, and game theory to coordinate right-of-way dynamically.

- Goal: to build an integrated ‘sensing-decision-control’ intelligent system. Through bio-inspired algorithms, cross-domain collaboration (V2X/digital twin) and energy-efficient hardware, it should achieve human-like driving capabilities in complex environments while balancing safety, efficiency and ethics.

Subscribe to AiTEN Robotics for more technical content.
